Ali Salman

Machine Learning Engineer

Meta

Hi! I’m a Machine Learning Engineer at Meta, working with the MGenAI team. Before joining Meta, I spent a few years at Zonda, developing models to track construction activity across the U.S. using satellite imagery. Before that, I interned as a software engineer at Uber ATG in San Francisco, where I worked on machine learning for prediction in autonomous driving.

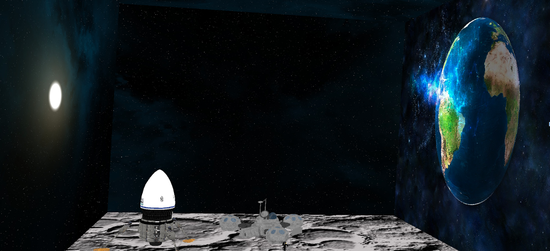

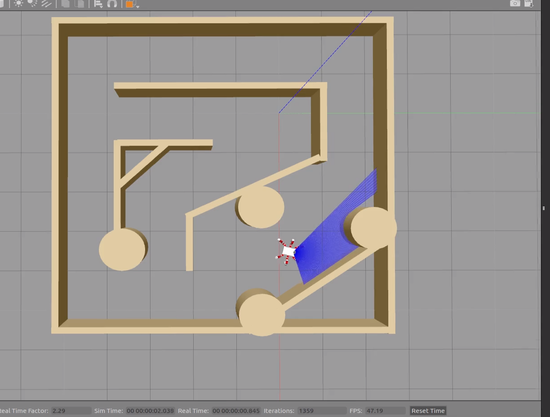

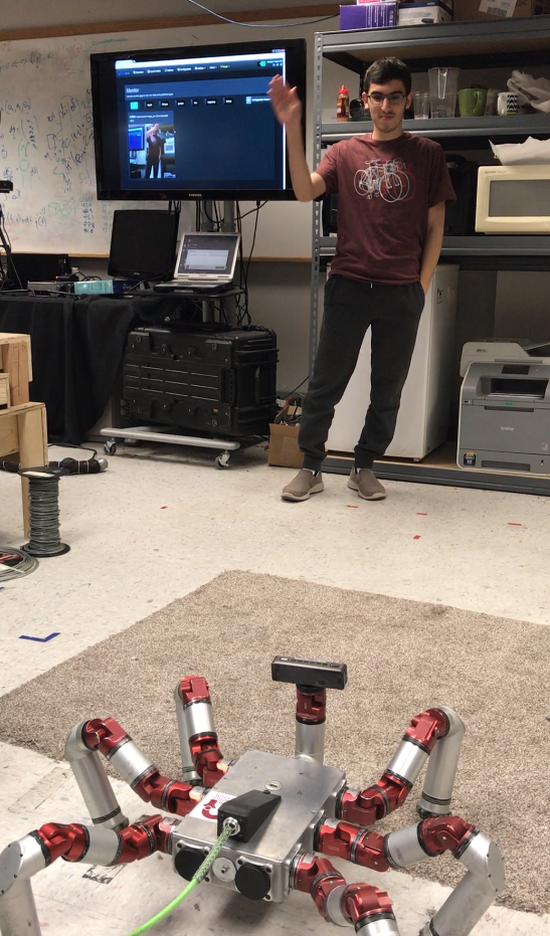

I hold a Master’s in Computer Science, specializing in Computer Vision and Robotics, from Grenoble INP - ENSIMAG. Before that, I earned a B.E. in Mechanical Engineering from the Lebanese University.

Interests

- LLMs

- GenAI

- Computer Vision

- Robotics

Education

MSc in Computer Science, 2020

Grenoble INP - ENSIMAG

BE in Mechanical Engineering, 2018

Lebanese University